Data quality tools for PostgreSQL

Data quality tools measure how well a data set serves its intended purpose. High-quality data can lead to better decision-making and faster insights, and it reduces the cost of identifying and dealing with bad data in the system. It can also save time and let companies focus on more important tasks.

Dataedo

Dataedo provides a comprehensive tool for maintaining, tracking, and improving data quality. Choose from a selection of 150+ built-in data quality rules or define bespoke rules using SQL queries. Schedule data quality checks for continuous monitoring, and use the DQ dashboard for quick issue detection and trend analysis.
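To make the idea of a SQL-based data quality rule concrete, here is a minimal sketch. It is not Dataedo's rule syntax or API. It assumes a rule is a query that counts violating rows, and it uses Python's built-in `sqlite3` as a stand-in for a PostgreSQL connection. The `customers` table, the rule names, and the `run_rules` and `demo` helpers are hypothetical.

```python
import sqlite3

# Hypothetical rules: each SQL query counts the rows that violate the rule.
RULES = {
    "email_not_null": "SELECT COUNT(*) FROM customers WHERE email IS NULL",
    "age_in_range": "SELECT COUNT(*) FROM customers WHERE age < 0 OR age > 120",
}


def run_rules(conn, rules):
    """Run each rule query and return {rule_name: number_of_failing_rows}."""
    return {name: conn.execute(sql).fetchone()[0] for name, sql in rules.items()}


def demo():
    # In-memory SQLite stands in for a PostgreSQL database (assumption).
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, email TEXT, age INTEGER)"
    )
    conn.executemany(
        "INSERT INTO customers (id, email, age) VALUES (?, ?, ?)",
        [(1, "a@example.com", 34), (2, None, 51), (3, "c@example.com", 140)],
    )
    results = run_rules(conn, RULES)
    conn.close()
    return results


if __name__ == "__main__":
    print(demo())
```

A rule passes when its count is zero. Running the same queries on a schedule and storing the counts is one simple way to get the kind of trend a dashboard would display.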

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

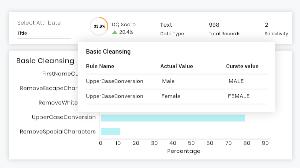

Global IDs Data Quality Suite

Global IDs Data Quality Suite ensures data quality by establishing control points and read-only quality controls at the database level. It continuously monitors quality metrics and automates control generation for critical data elements across all kinds of data sources.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Trillium Quality

Trillium Quality rapidly transforms high-volume, disconnected data into trusted and actionable business insights with scalable enterprise data quality. It is designed to run natively in cloud or on-premises big data environments, ensuring your business information is integrated, fit for purpose, and accessible across the enterprise, regardless of volume.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

SAS Data Quality

SAS Data Quality is a comprehensive tool that meets all the data quality requirements of a business. It makes it easy to profile and identify problems, preview data, and set up repeatable processes to maintain a high level of data quality.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Open Source Data Quality and Profiling

Open Source Data Quality and Profiling is an open source project dedicated to data quality and data preparation solutions. The project is developing a high-performance integrated data management platform that will seamlessly handle data integration, data profiling, data quality, data preparation, dummy data creation, metadata discovery, anomaly discovery, data cleansing, reporting, and analytics.

| Commercial: | Free |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Talend Data Quality

As an integral part of Talend Data Fabric, Talend Data Quality profiles, cleans, and masks data in real time. It lets you quickly identify data quality issues, discover hidden patterns, and spot anomalies through summary statistics and graphical representations.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

DQLabs

DQLabs.ai is an augmented data quality platform to manage your entire data quality life cycle. With ML and self-learning capabilities, organizations can measure, monitor, remediate and improve data quality across any type of data – all in one agile, innovative self-service platform.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Trifacta

Trifacta is an open and interactive cloud platform for data engineers and analysts to collaboratively profile, prepare, and pipeline data for analytics and machine learning. It automatically generates visual profiles of data based on its content. Every profile is fully interactive: selecting elements of the profile prompts transformation suggestions.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

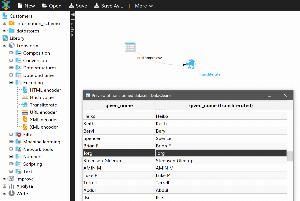

DataCleaner

DataCleaner is a premier open source data quality solution. The heart of DataCleaner is a strong data profiling engine for discovering and analyzing the quality of your data. Find the patterns, missing values, character sets, and other characteristics of your data values.
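As an illustration of what this kind of profiling looks for, the sketch below counts missing values, summarizes value patterns, and lists non-ASCII characters in a column. It is a generic example and does not use DataCleaner's engine or API. The `value_pattern` and `profile_column` helpers and the sample values are hypothetical.

```python
from collections import Counter


def value_pattern(value):
    """Map a value to its shape: letters become 'A', digits '9', others are kept."""
    out = []
    for ch in value:
        if ch.isalpha():
            out.append("A")
        elif ch.isdigit():
            out.append("9")
        else:
            out.append(ch)
    return "".join(out)


def profile_column(values):
    """Return the missing-value count, pattern frequencies, and non-ASCII characters."""
    present = [v for v in values if v is not None and v.strip() != ""]
    missing = len(values) - len(present)
    patterns = Counter(value_pattern(v) for v in present)
    non_ascii = sorted({ch for v in present for ch in v if ord(ch) > 127})
    return {"missing": missing, "patterns": dict(patterns), "non_ascii": non_ascii}


if __name__ == "__main__":
    sample = ["AB-123", "CD-456", None, "  ", "EF_78", "Zoë-001"]
    print(profile_column(sample))
```

Rare patterns such as `AA_99` in a column dominated by `AA-999` are often the first hint of inconsistent formatting.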

| Commercial: | Free |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

BigID

BigID offers actionable data intelligence by empowering you to find, classify, catalog, profile, and get context for all your data, whether in the cloud or the data center, at rest or in motion, structured or unstructured, at petabyte scale. It lets you identify sensitive data using hundreds of pre-built NLP, deep learning, and pattern classifiers.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Infogix Data360 DQ+

Infogix Data360 DQ+ is an enterprise data quality solution that automates data quality checks across the entire data supply chain from the time information enters your organization throughout its whole journey. You can score the likelihood of possible inaccuracies based on historical data characteristics and issue reconciliations by leveraging machine learning algorithms.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Aperture Data Studio

Aperture Data Studio combines self-service data quality with globally curated data sets into a single data quality platform. This empowers modern data practitioners to build a consistent, accurate, and holistic view of their consumer data quickly and effortlessly. It lets you set custom workflows for data profiling, cleansing, validation, transformation, enrichment, and deduplication.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

TIBCO Clarity

TIBCO Clarity is a data preparation, profiling, and cleansing tool. You can use TIBCO Clarity to discover, profile, cleanse, and standardize raw data collected from disparate sources, and to provide good-quality data for accurate analysis and intelligent decision-making.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Qualdo

Qualdo is a single, centralized tool to measure, monitor, and improve data quality from all your cloud database management tools and data silos. It lets you deploy powerful auto-resolution algorithms to track and isolate critical data issues. Take advantage of robust reports and alerts to manage your enterprise regulatory compliance.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

RightData

RightData is a self-service suite of applications that helps you achieve Data Quality Assurance, Data Integrity Audit and Continuous Data Quality Control with automated validation and reconciliation capabilities.

| Commercial: | Commercial |
|---|---|
| Data cleansing: | |
| Data Discovery & Search: | |
| Data Profiling: | |
| Data standardization: | |
| Free edition: | |

Data quality tools are the scripts that support data quality processes, and they rely heavily on the identification, understanding, and correction of data errors. A data quality tool improves the accuracy of the data and helps ensure good data governance across the data-driven cycle.

The common functions that every data quality tool must perform are:

• Data profiling

• Data monitoring

• Parsing

• Standardization

• Data enrichment

• Data cleansing
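Four of the functions listed above (parsing, standardization, enrichment, and cleansing) can be sketched as a small pipeline. This is an illustration only, not how any particular tool implements them. The record layout, the lookup tables, and all function names are hypothetical, and profiling and monitoring are left out to keep it short.

```python
import re

# Hypothetical lookup tables used for standardization and enrichment.
COUNTRY_CODES = {"usa": "US", "united states": "US", "u.s.": "US", "poland": "PL"}
DIAL_PREFIX = {"US": "+1", "PL": "+48"}


def parse(raw):
    """Parsing: split a 'name; country; phone' string into named fields."""
    name, country, phone = (part.strip() for part in raw.split(";"))
    return {"name": name, "country": country, "phone": phone}


def standardize(record):
    """Standardization: map country spellings to one code, keep only phone digits."""
    out = dict(record)
    out["country"] = COUNTRY_CODES.get(record["country"].lower(), record["country"])
    out["phone"] = re.sub(r"\D", "", record["phone"])
    return out


def enrich(record):
    """Enrichment: add a dialing prefix looked up from the country code."""
    out = dict(record)
    out["dial_prefix"] = DIAL_PREFIX.get(record["country"])
    return out


def cleanse(records):
    """Cleansing: drop records with no phone and duplicates by (name, phone)."""
    seen, kept = set(), []
    for rec in records:
        key = (rec["name"].lower(), rec["phone"])
        if rec["phone"] and key not in seen:
            seen.add(key)
            kept.append(rec)
    return kept


def pipeline(raw_rows):
    """Run parse, standardize, and enrich on each row, then cleanse the result."""
    return cleanse([enrich(standardize(parse(row))) for row in raw_rows])


if __name__ == "__main__":
    rows = [
        "Ann Lee; USA; (555) 010-1234",
        "ann lee; United States; 555-010-1234",
        "Jan Nowak; Poland; ",
        "Bo Chen; Canada; 555 010 9999",
    ]
    for record in pipeline(rows):
        print(record)
```

Standardizing before cleansing matters here: the two "Ann Lee" rows only become recognizable as duplicates once their phone numbers share one format.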

Choosing the right data quality tool is essential and impacts the final results. To help you with the right selection, we prepared a list of tools that will assist you with maintaining a high level of data quality.
